We had the opportunity to host Bruce Liu, one of the authors of the Inner Thoughts paper, in our team’s AI learning session today. Sharing my key takeaways.

Key Takeaways

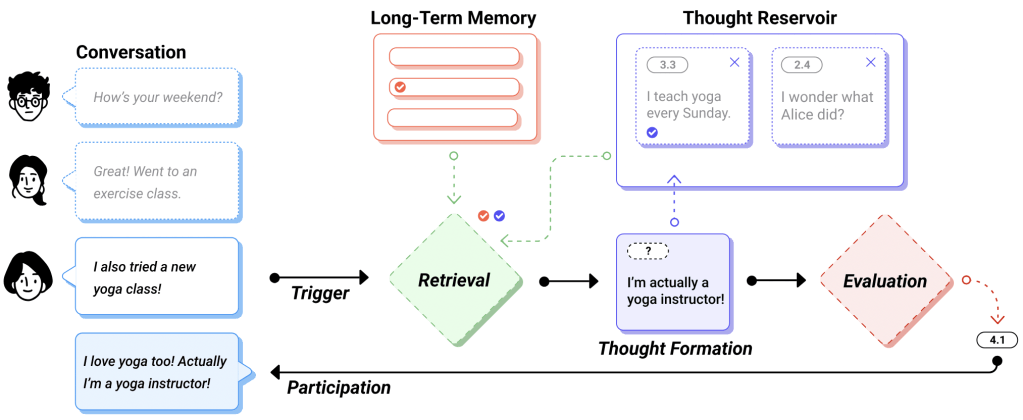

- Giving an agent a persona and having it run a continuous internal monologue leads to more natural participation in group conversations.

- The system generates multiple candidate thoughts and evaluates them on:

  - relevance

  - information gap

  - impact

  - appropriateness

  It only expresses a thought if its motivation passes a threshold.
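To make the threshold idea concrete, here is a minimal sketch of the selection step. It is not the paper's implementation: the `Thought` class, the per-criterion scores in [0, 1], and the equal-weight average are all assumptions made for illustration. In a real system the scores would come from a model, not hand-written numbers.

```python
from dataclasses import dataclass
from typing import Optional

# The four criteria from the talk. Equal weighting is an assumption.
CRITERIA = ("relevance", "information_gap", "impact", "appropriateness")


@dataclass
class Thought:
    """Hypothetical container for a candidate thought and its scores."""
    text: str
    scores: dict  # criterion name -> score in [0, 1] (assumed scale)

    def motivation(self) -> float:
        # Plain average of the criteria; the paper's scoring may differ.
        return sum(self.scores[c] for c in CRITERIA) / len(CRITERIA)


def select_thought(candidates: list, threshold: float) -> Optional[Thought]:
    """Return the most motivated thought, or None if none clears the threshold."""
    if not candidates:
        return None
    best = max(candidates, key=lambda t: t.motivation())
    return best if best.motivation() >= threshold else None


candidates = [
    Thought("I agree.", dict(relevance=0.5, information_gap=0.0,
                             impact=0.25, appropriateness=0.75)),
    Thought("The deadline moved to Friday.", dict(relevance=1.0, information_gap=1.0,
                                                  impact=0.75, appropriateness=0.75)),
]
chosen = select_thought(candidates, threshold=0.7)
print(chosen.text if chosen else "(stay silent)")
```

Returning `None` is the interesting case: staying silent is a normal outcome of the selection step, not an error.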

- This makes the agent selective, not reactive. It avoids over-speaking and feels socially aware.

- As a baseline, the authors fine-tuned GPT-3.5 on the MPC (Multiparty Chat Corpus) dataset to predict the next speaker. When the agent is predicted to speak next, the model is prompted to generate a response based on its persona. They compared the Inner Thoughts approach against this baseline.

- The overall loop is:

  - Trigger – Initiating the thought process (in this paper, when someone posts a message or a silence threshold is reached)

  - Retrieval – Accessing relevant memories and context

  - Thought Formation – Generating potential thoughts

  - Evaluation – Assessing intrinsic motivation to express thoughts

  - Participation – Deciding when and how to engage in conversation
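The five stages can be sketched as one function that handles a single trigger. This is a toy illustration, not the thoughtful-agents API: `run_turn`, the keyword-overlap retrieval, and the caller-supplied `form_thoughts` and `evaluate` functions are hypothetical stand-ins for what would be LLM calls and a proper memory store.

```python
from typing import Callable, Optional


def run_turn(
    event: str,
    memory: list,
    form_thoughts: Callable[[str, list], list],
    evaluate: Callable[[str, str], float],
    threshold: float,
) -> Optional[str]:
    """One pass through the loop for a single trigger event."""
    # 1. Trigger: `event` is a new message (it could also mark a silence timeout).
    # 2. Retrieval: keep memories that share a word with the event (toy heuristic).
    words = set(event.lower().split())
    context = [m for m in memory if words & set(m.lower().split())]
    # 3. Thought formation: delegated to the caller (an LLM call in practice).
    thoughts = form_thoughts(event, context)
    # 4. Evaluation: score the motivation to express each thought.
    scored = [(evaluate(t, event), t) for t in thoughts]
    # 5. Participation: speak only if the best thought clears the threshold.
    if not scored:
        return None
    score, best = max(scored, key=lambda pair: pair[0])
    return best if score >= threshold else None


# Stub components, for illustration only.
memory = ["Alice prefers tea", "The demo is on Friday"]
form = lambda event, ctx: [f"Reminder: {m}" for m in ctx]
score_fn = lambda thought, event: 0.9 if "demo" in thought.lower() else 0.1

print(run_turn("When is the demo?", memory, form, score_fn, threshold=0.5))
print(run_turn("Nice weather today", memory, form, score_fn, threshold=0.5))
```

The second call returns `None` because nothing relevant is retrieved, so no thought is formed and the agent stays quiet.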

- The important idea: Not every thought should be spoken.

- Another interesting idea: they used different prompts to simulate System-1 vs. System-2 thinking (as in Thinking, Fast and Slow) when generating thoughts.

- They use a simple developer-set probability to choose between fast System-1 thoughts and slower System-2 reasoning, but this idea opens the door to far more sophisticated, context-aware switching.
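A fixed-probability switch like this takes only a few lines. The sketch below is an assumption about how it could look, not code from the repo: `pick_thinking_mode`, the 0.3 probability, and the two prompt strings are invented for illustration.

```python
import random


def pick_thinking_mode(system2_probability: float, rng: random.Random) -> str:
    """Return "system2" with the given probability, otherwise "system1"."""
    if not 0.0 <= system2_probability <= 1.0:
        raise ValueError("system2_probability must be in [0, 1]")
    return "system2" if rng.random() < system2_probability else "system1"


# Hypothetical prompt templates; the real prompts are in the paper and repo.
PROMPTS = {
    "system1": "React quickly and intuitively to the latest message.",
    "system2": "Reason step by step about the conversation before forming a thought.",
}

rng = random.Random(0)  # seeded so repeated runs behave the same
mode = pick_thinking_mode(0.3, rng)
print(mode, "->", PROMPTS[mode])
```

A context-aware version would replace the constant with a function of the conversation state, for example raising the System-2 probability when the agent is asked a direct question.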

- The agent behaves more like a participant in the conversation, not a tool that gets invoked only when @mentioned.

- The code is clean and packaged well: https://github.com/xybruceliu/thoughtful-agents

- It should be very tractable to use it inside a Teams SDK agent for Python.
