Last Friday, our learning session covered Context Rot, a paper from the Chroma vector database team on how longer inputs affect LLM performance.

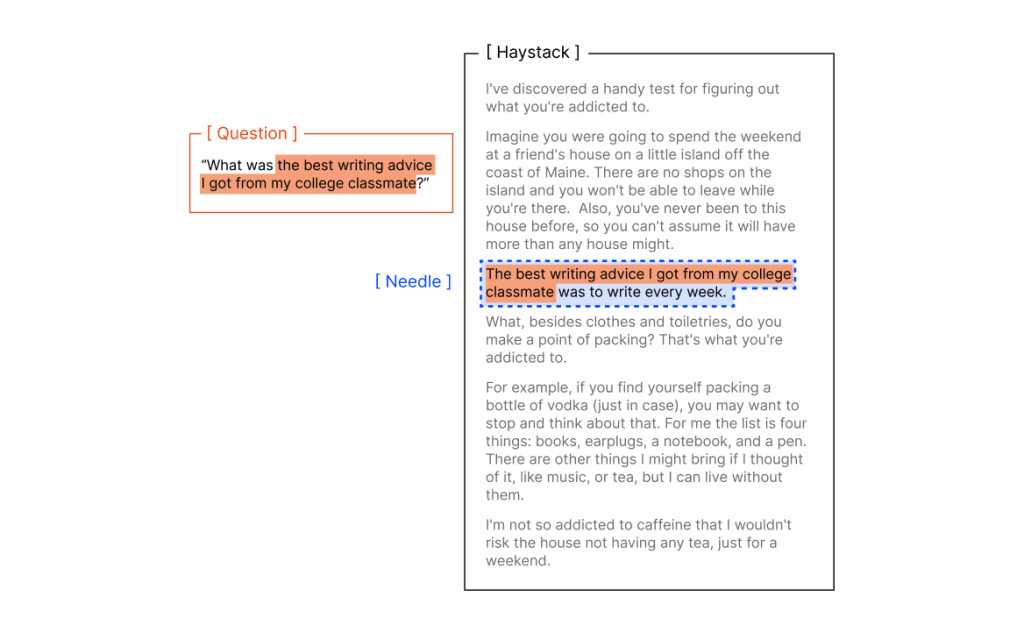

They ran experiments with 18 leading LLMs, like o3, GPT-4.1, Claude, Gemini, and Qwen, on needle-in-a-haystack style questions, then measured how often the models gave the right answer.

The best way to TLDR is to just watch this ~7 minute video on YouTube:

Here are the key takeaways:

- Longer context hurts: Don’t overload models with full reports or long histories. As irrelevant text piles up, even strong models miss answers. Keep inputs lean for reliable results.

- Clarity of the query matters: Vague questions get worse answers in long contexts. Since you can’t rely on users to always be precise, systems must rewrite queries, for example by rephrasing them into clearer forms, mapping them to structured intent, or combining them with retrieval to anchor the request.

- Distractors amplify errors: Models can be tricked by irrelevant but similar text. In compliance or legal reviews, this means they might confuse one clause for another. Systems must filter out look-alike noise.

- Use embeddings + keyword anchors together (semantic + lexical match).

- Enforce entity checks (IDs, dates, names must align).

- Apply re-ranking models to filter passages that look close but don’t directly answer.

- Train retrievers with negative samples (examples of near-duplicate but irrelevant text).

- Structure of irrelevant content matters: Clean, coherent irrelevant text is more distracting than random noise. That means polished background material can actually reduce accuracy if not filtered.

- Focused input beats full input: Retrieval layers or context filters that feed only what’s relevant improve both accuracy and cost. Businesses should invest in these instead of relying on raw long-context alone.

- Exact repetition breaks down: Researchers asked models to simply copy long blocks of text word-for-word. If a model can’t copy long sequences reliably, it can’t be trusted to surface exact details (IDs, contract terms, medical dosages). Retrieval workflows must include verification.

So the bigger question is: should you solve context-rot as an app-developer or wait for big-labs to solve?

Big labs will keep improving the physics of long context: better positional encodings, more efficient attention, training strategies that improve decay. But those fixes won’t handle your domain specifics: which clauses in a contract matter, which patient record fields are critical, or how to enforce compliance rules. That’s squarely on app / agent developers and those investments should be durable.

Retrieval layers, query normalization, and verification pipelines will remain useful even if models get better, because they enforce governance, and add trust, and cut costs.

What may become obsolete are low-level hacks like custom chunk sizes. So, right strategy seems like not to wait. Build domain-aware context engineering now, knowing labs will lift the floor while your systems enforce the ceiling.

One strategy is to win customers who care about context-rot being solved well today, even if some of that work gets thrown away as labs improve. Those early wins give you a base to move into higher-value scenarios while competitors catch up later on the basics with less effort.

Leave a comment