Salesforce AI’s new MCP-Universe benchmark puts frontier models through 200+ real-world tool-use tasks. The results: GPT-5 lands at 43.7%, Grok-4 at 33.3%, and Claude-Sonnet at 29.4%.

The rest of this post breaks down why these numbers are so much lower than BFCL scores, which domains drag models down most, and what the findings mean for teams wiring MCP into their platforms.

TLDR:

- Frontier models underperform: GPT‑5 tops out at 43.72% success, Grok‑4 at 33.33%, and Claude‑4.0‑Sonnet at 29.44%, while the best open‑source model reaches 24.68% (details in the paper).

- Failures are driven by three core challenges:

  - long contexts that balloon across multi‑step tool use,

  - unfamiliar/underspecified tool interfaces that trigger API misuse (the “unknown‑tools” problem), and

  - distraction from large sets of unrelated tools.

- Simple mitigations help inconsistently: per‑step summarization and a pre‑task “exploration” phase yield domain‑ and model‑specific gains but no universal lift.

- Models generally follow formats well but falter on content correctness, especially on dynamic, time‑sensitive tasks.

- Domain difficulty varies sharply (location navigation is uniformly hard; GPT‑5 fares best in finance and 3D).

- Agent architecture matters: o3 running on the OpenAI Agents SDK outperforms the same model in a ReAct loop.

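
Per‑step summarization, one of the mitigations above, is essentially context compaction: keep the most recent tool results verbatim and collapse older ones so the context stops ballooning. Below is a minimal sketch, not the paper's implementation. It assumes a simple in‑memory message list, and `summarize` is a hypothetical stand‑in (plain truncation) for what would normally be an LLM summarization call.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # e.g. "user", "assistant", "tool"
    content: str


def summarize(text: str, max_chars: int = 80) -> str:
    """Hypothetical stand-in for an LLM summarization call: plain truncation."""
    if len(text) <= max_chars:
        return text
    return text[: max_chars - 3] + "..."


def compact_history(history: list[Message], keep_recent: int = 2) -> list[Message]:
    """Keep the last `keep_recent` tool results verbatim and summarize older ones.

    Non-tool messages (task prompt, assistant reasoning) pass through untouched.
    """
    if keep_recent < 0:
        raise ValueError("keep_recent must be non-negative")
    tool_indices = [i for i, m in enumerate(history) if m.role == "tool"]
    cutoff = len(tool_indices) - keep_recent
    old = set(tool_indices[:cutoff]) if cutoff > 0 else set()
    compacted = []
    for i, m in enumerate(history):
        if i in old:
            compacted.append(Message(m.role, "[summary] " + summarize(m.content)))
        else:
            compacted.append(m)
    return compacted


# Usage: three large tool results; only the oldest one gets compacted.
history = [
    Message("user", "Find the cheapest flight and book it."),
    Message("tool", "x" * 500),
    Message("assistant", "Checking more results."),
    Message("tool", "y" * 500),
    Message("tool", "z" * 500),
]
compacted = compact_history(history, keep_recent=2)
print(sum(len(m.content) for m in history), "->", sum(len(m.content) for m in compacted))
```

The design choice worth noting is that summarization is lossy: if a later step needs a detail from an old tool result, compaction can hurt, which is one plausible reason the gains show up only for some domains and models.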

Takeaways for teams integrating MCP:

- Limit Tool Exposure: Avoid exposing LLMs to overly large or noisy tool environments. Curate and scope tool sets to minimize “cognitive” load and improve selection accuracy.

- Orchestration Design Matters: Design orchestration layers that guide LLMs toward relevant tools. Consider SDK-level constraints or routing logic to reduce ambiguity.

- Platform Implications: Integration strategies should account for tool density and relevance filtering. Explore tooling levers that help LLMs navigate complex tool ecosystems: constrain and route the tool set, tighten tool interfaces, shape returned data, manage long‑context growth, standardize errors, and so on.
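
Limiting tool exposure and routing toward relevant tools can be as simple as a pre‑filter that runs before the model ever sees the tool list. This is a minimal sketch under stated assumptions: the `Tool` record, its `domain` tag, and `scope_tools` are hypothetical names, not part of any MCP SDK, and the keyword‑overlap score is a crude placeholder for whatever relevance signal (embeddings, a classifier, a rules table) a real router would use.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    domain: str  # hypothetical routing tag, e.g. "finance", "maps", "github"


def tokens(text: str) -> set[str]:
    """Lowercased word set; underscores split names like get_stock_price."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def scope_tools(task: str, tools: list[Tool], allowed_domains: set[str],
                top_k: int = 3) -> list[Tool]:
    """Return at most `top_k` tools from allowed domains, ranked by keyword overlap.

    Tools outside the allowed domains, or with zero overlap, never reach the model.
    """
    task_words = tokens(task)
    scored = []
    for tool in tools:
        if tool.domain not in allowed_domains:
            continue  # hard constraint: route by domain first
        overlap = len(task_words & tokens(tool.name + " " + tool.description))
        if overlap > 0:
            scored.append((overlap, tool.name, tool))
    # Highest overlap first; tool name breaks ties deterministically.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [tool for _, _, tool in scored[:top_k]]


catalog = [
    Tool("get_stock_price", "Fetch the latest stock price for a ticker", "finance"),
    Tool("get_exchange_rate", "Fetch the exchange rate between two currencies", "finance"),
    Tool("route_directions", "Compute driving directions between two places", "maps"),
    Tool("create_issue", "Open an issue in a repository", "github"),
]
selected = scope_tools("What is the latest stock price for NVDA?", catalog, {"finance"}, top_k=2)
print([t.name for t in selected])
```

Note that `get_exchange_rate` survives here only because both texts contain "the", which is exactly the kind of noise that makes naive relevance filtering a weak substitute for a proper router.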

But BFCL shows the frontier models at more than 70% accuracy?!

The Berkeley Function Calling Leaderboard (BFCL) has frontier LLMs such as GPT-4.5, Claude-Opus-4, and Claude-Sonnet clearing roughly 70% overall accuracy, so a natural question is what MCP-Universe does differently to push scores so much lower (e.g., GPT-4.5 scores 70.85% on BFCL but 24.68% on MCP-Universe).

The crux is that MCP-Universe runs tasks against real MCP servers and lets contexts grow long, while BFCL scores models on a curated, static dataset.

MCP-Universe also leans heavily on multi-step reasoning, where small early errors can snowball.
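
A back-of-the-envelope model shows why snowballing errors matter. Assuming, purely for illustration, that every step of a task must succeed and that each succeeds independently with the same probability p, an n-step task succeeds end to end with probability p^n. Real agent steps are neither independent nor uniform, and the per-step rates below are hypothetical, not figures from the paper.

```python
def chain_success(p_step: float, n_steps: int) -> float:
    """End-to-end success if all n independent steps must each succeed with p_step."""
    if not 0.0 <= p_step <= 1.0:
        raise ValueError("p_step must be a probability")
    if n_steps < 0:
        raise ValueError("n_steps must be non-negative")
    return p_step ** n_steps


# Hypothetical per-step success rates versus task length.
for p in (0.99, 0.95, 0.90):
    for n in (1, 5, 10, 20):
        print(f"p={p:.2f} n={n:2d} -> {chain_success(p, n):.1%}")
```

Under this toy model, a 95% per-step success rate yields only about 60% success on a 10-step task and about 36% on a 20-step task, while a single-call benchmark would report the 95% directly.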

The large number of unrelated tools in MCP-Universe (to mimic real-world messiness) is another factor.

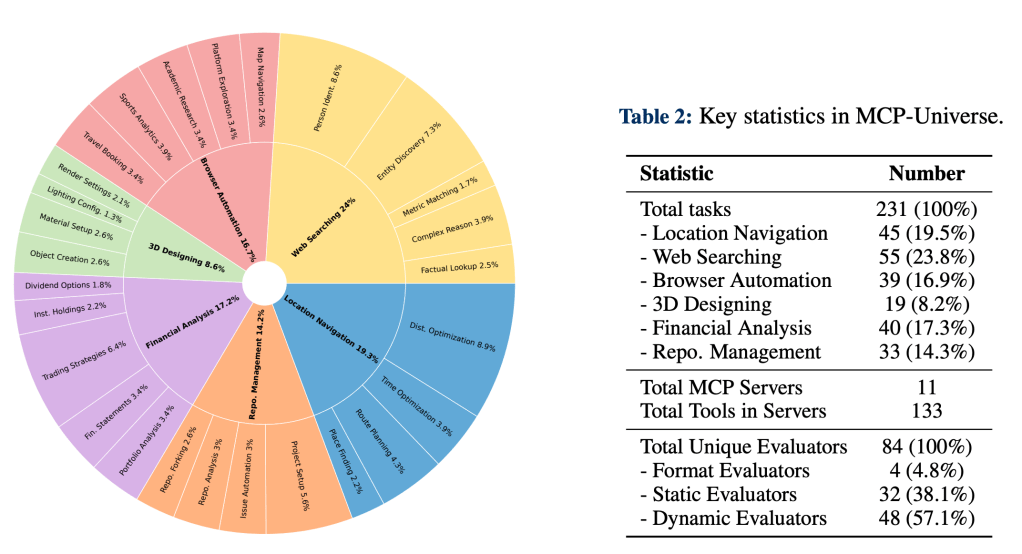

What domains did they test on?

See chart below:

Example of a task:

So, what does this mean?

You get what you measure. Now that MCP-Universe is showing frontier LLMs struggling, the developers behind those models have a clear target to chase. Expect the accuracy of real-world MCP tool calls to climb fast in the coming months.
