Everyone’s excited about MCP, and I love it. But just because you can expose every API endpoint as a tool doesn’t mean you should.

This is something Tom Laird-McConnell and I discussed when OpenAPI-based plugins were all the rage, and it stuck with me: APIs and user actions are rarely a clean 1:1 mapping.

Take the famous PetStore Swagger sample; it’s enough to make my point. It has a find-by-ID endpoint (GET /pet/{petId}), but what if your UX needs to find a pet by name? Classic scenario: the backend team hasn’t shipped what the frontend needs, at least not yet.

The data model for Pet has a name, but there’s no API to find pets by name!

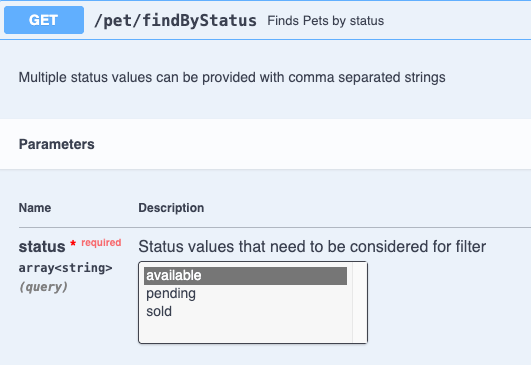

Ah, sure, you point out: there’s an API to find pets by status (GET /pet/findByStatus)!

And once we have the set of pets with a given status, we can filter that set by name.

So, now in your app you’re building a drop-down to pick a status (available/pending/sold), pulling pets with that status, and writing client logic to find pets by name.

That glue logic? That’s your app acting as the abstraction layer over raw APIs, deciding what’s allowed, what needs user confirmation, and what to hide altogether.
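Here is a minimal sketch of that glue logic in Python. It assumes the status lookup is passed in as a plain function, so `fake_fetch_by_status` and `SAMPLE_PETS` are hypothetical stand-ins for a real call to the find-by-status endpoint, and the exact, case-insensitive name match is my assumption about what "find by name" should mean.

```python
from typing import Callable, Dict, List

Pet = Dict[str, object]

# Hypothetical sample data standing in for real find-by-status responses.
SAMPLE_PETS: Dict[str, List[Pet]] = {
    "available": [
        {"id": 1, "name": "Rex", "status": "available"},
        {"id": 2, "name": "Bella", "status": "available"},
    ],
    "sold": [{"id": 3, "name": "rex", "status": "sold"}],
}


def fake_fetch_by_status(status: str) -> List[Pet]:
    """Stand-in for a call to GET /pet/findByStatus?status=<status>."""
    return SAMPLE_PETS.get(status, [])


def find_pets_by_name(
    name: str,
    status: str,
    fetch_by_status: Callable[[str], List[Pet]],
) -> List[Pet]:
    """Fetch pets with the chosen status, then keep those whose name matches."""
    wanted = name.strip().casefold()
    if not wanted:
        # An empty search term matches nothing rather than every unnamed pet.
        return []
    return [
        pet
        for pet in fetch_by_status(status)
        # Assumption: exact, case-insensitive comparison on the "name" field.
        if str(pet.get("name") or "").casefold() == wanted
    ]


if __name__ == "__main__":
    print(find_pets_by_name("rex", "available", fake_fetch_by_status))
```

The point is less the ten lines of filtering than where they live: the app, not the raw API, decides that "find by name" exists as an action at all.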

Now, you might say:

But wait, why not just expose all our APIs as MCP tools? LLMs can handle it, right? They can work out the “glue logic” on the fly.

Well, LLMs tend to pick the wrong tool more often as the number of tools they have to reason over grows. See OpenAI’s best practices for function calling: they suggest keeping fewer than 20 functions available at any one time (functions are what MCP tools get translated into for the LLM to choose from). In their words:

Keep the number of functions small for higher accuracy.

- Evaluate your performance with different numbers of functions.

- Aim for fewer than 20 functions at any one time, though this is just a soft suggestion.

So yes, it’s tempting to auto-generate tools from every endpoint. But resist that urge.

Exposing everything leads to a drop in LLM tool-call accuracy.

So, what’s the takeaway? Curate. Ship a purposeful set of tools in your MCP server: ones that map directly to how users will get things done.
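As a rough sketch of what curating could look like, here is a small hand-written tool list in Python. Everything in it is illustrative: the `Tool` class, the tool names, the `wraps` mapping to underlying operations, and the plain-dict manifest are hypothetical and are not the MCP wire format or any SDK’s API. The only number taken from the guidance quoted earlier is the soft limit of 20.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# "Fewer than 20 functions" is the soft suggestion from OpenAI's guidance.
SOFT_TOOL_LIMIT = 20


@dataclass(frozen=True)
class Tool:
    """A user-level action, not a raw endpoint (hypothetical structure)."""
    name: str
    description: str
    wraps: Tuple[str, ...]  # underlying API operations this tool is built on
    needs_confirmation: bool = False


# Hand-picked tools; the names and groupings are assumptions for illustration.
CURATED_TOOLS: List[Tool] = [
    Tool("find_pet_by_name", "Find pets with a given status whose name matches.",
         ("findPetsByStatus",)),
    Tool("get_pet_details", "Look up a single pet by its ID.",
         ("getPetById",)),
    Tool("order_pet", "Place an order for a pet after the user confirms.",
         ("getPetById", "placeOrder"), needs_confirmation=True),
]


def exceeds_soft_limit(tools: List[Tool], limit: int = SOFT_TOOL_LIMIT) -> bool:
    """True when the tool count is no longer 'fewer than' the soft limit."""
    return len(tools) >= limit


def tool_manifest(tools: List[Tool]) -> List[Dict[str, object]]:
    """Return what the model would see: names and descriptions only."""
    names = [tool.name for tool in tools]
    if len(set(names)) != len(names):
        raise ValueError("tool names must be unique")
    return [
        {"name": t.name, "description": t.description,
         "needs_confirmation": t.needs_confirmation}
        for t in tools
    ]


if __name__ == "__main__":
    print(tool_manifest(CURATED_TOOLS))
    print("over soft limit:", exceeds_soft_limit(CURATED_TOOLS))
```

Note that the raw operations never appear in the manifest. The model chooses among three actions a user would recognize, and the server keeps the decision about which endpoints those actions touch.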

Notes:

- When plugins based on OpenAPI specs were the hype, we hit this same issue. That’s when I had the conversation with Tom. The lesson then, and now with MCP, is this: you must decide the business logic you want to expose, built on top of whatever underlying APIs exist.

- I will revisit this article as the OpenAI guidelines for function-calling change, based on enhancements in underlying model capabilities.

- There are several benchmarks tracking LLM tool-calling capabilities, e.g., the Berkeley Function Calling Leaderboard and ToolBench.
