Friday night, I had this thought: If we fed raw observations into AI, could it discover the hidden laws of physics – just like Newton pieced together gravity from centuries of data?

Because if AI could truly discover a fundamental law on its own, wouldn’t that be a scientific breakthrough?!

So, I decided to build a model that predicts gravitational force purely from masses and distances: no formulas, no explicit laws, just learned behavior.

Attempt #1: Neural Networks

I started with deep learning, training a neural network to predict force from raw data.

❌ No success

- The neural network fit the training data, but it never captured the inverse-square law, so it didn’t generalize.

- Instead of discovering 1 / r^2, it found weird approximations, like F = r^0.58.

- The model memorized correlations instead of deriving a real equation.

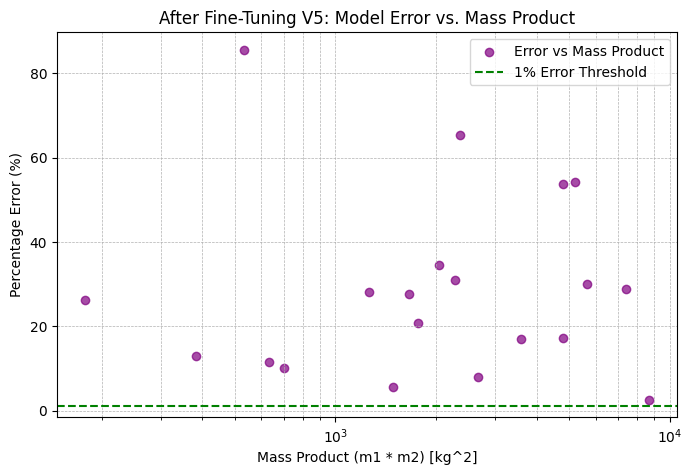

This chart shows how the model’s prediction error varies with the product of the two masses (m1 * m2) after fine-tuning.

- Each purple dot represents a test case, with mass product on the x-axis and percentage error on the y-axis.

- The green dashed line represents the 1% error threshold, showing where we ideally want all predictions to be.

- Some test cases have very low error (close to 0%), while others show high errors exceeding 80%, especially for specific mass ranges.
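To reproduce this kind of chart, you need a per-case error metric. Below is a minimal sketch. It assumes "percentage error" means absolute relative error times 100, because the post doesn't spell out the formula. The function names are illustrative only.

```python
def percentage_error(predicted, actual):
    """Absolute relative error of each prediction, in percent (assumed definition)."""
    return [abs(p - a) / abs(a) * 100.0 for p, a in zip(predicted, actual)]

def fraction_within(errors, threshold=1.0):
    """Share of test cases whose error is at or below the threshold (1% by default)."""
    return sum(e <= threshold for e in errors) / len(errors)

# Toy example: two close predictions and one that is off by 80%.
actual = [100.0, 250.0, 40.0]
predicted = [100.5, 249.0, 72.0]
errors = percentage_error(predicted, actual)  # approximately [0.5, 0.4, 80.0]
share = fraction_within(errors)               # 2 of the 3 cases are within 1%
```

Plotting `errors` against `m1 * m2` for each test case, with a horizontal line at 1, gives a chart of the same shape as the one above.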

The model didn’t fully generalize Newton’s Law, possibly learning an approximate trend instead of the exact equation.

I tried various fixes, but to no avail:

- Experimented with different architectures and activation functions.

- Tried using log-transformed inputs to make power laws easier to learn.

- Fine-tuned hyperparameters and trained longer to improve accuracy.
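Log-transforming the inputs is a natural thing to try because logs turn Newton's law (with G = 1) into a linear relation: log F = log m1 + log m2 - 2 log r. The sketch below makes that concrete with a plain least-squares fit in log space. This is a swapped-in linear model, not the neural network from the experiment. On noise-free data it recovers the exponents 1, 1 and -2.

```python
import math
import random

def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(m[i][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for i in range(col + 1, n):
            factor = m[i][col] / m[col][col]
            for j in range(col, n + 1):
                m[i][j] -= factor * m[col][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def fit_log_linear(samples):
    """Least squares for log F = a*log(m1) + b*log(m2) + c*log(r) + d."""
    rows = [[math.log(m1), math.log(m2), math.log(r), 1.0] for m1, m2, r, _ in samples]
    ys = [math.log(f) for _, _, _, f in samples]
    # Normal equations: (X^T X) w = X^T y
    xtx = [[sum(row[i] * row[j] for row in rows) for j in range(4)] for i in range(4)]
    xty = [sum(row[i] * y for row, y in zip(rows, ys)) for i in range(4)]
    return solve(xtx, xty)

rng = random.Random(0)
samples = []
for _ in range(200):
    m1, m2, r = rng.uniform(1, 100), rng.uniform(1, 100), rng.uniform(0.05, 10)
    samples.append((m1, m2, r, m1 * m2 / r**2))

a, b, c, d = fit_log_linear(samples)
print(f"F ≈ {math.exp(d):.3f} * m1^{a:.3f} * m2^{b:.3f} * r^{c:.3f}")
```

There is a catch. This only works because the linear model already assumes F is a product of powers, and that structure is exactly what the network was supposed to discover on its own.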

So, I went in a different direction (might revisit this approach in the future though).

Attempt #2: Symbolic Regression with Genetic Programming

Instead of training a black-box model, I switched to symbolic regression – a type of AI that evolves mathematical equations over time. It searches for the actual equation that best fits the data.

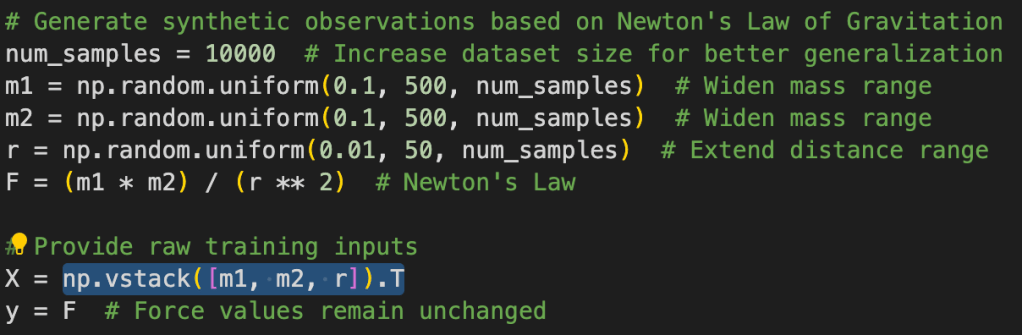

To make sure the AI had only the rawest possible inputs, it was given only:

- Mass values (m1, m2), randomly generated between 1 and 100 kg

- Distance values (r), randomly chosen between 0.05 and 10 meters

- Force values (F), computed using Newton’s Law with the gravitational constant set to 1: F = m1 * m2 / r^2

The AI was not told that this data followed an inverse-square law. Its only job? Find the best equation that fits the numbers.
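Here is a minimal sketch of this data setup in plain Python. Some details are assumptions: uniform sampling (the post only says "randomly"), plus the sample count and seed. `make_gravity_dataset` is an illustrative name, not something from the notebook. The search sees only `X` and `y`, and the columns of `X` follow the x0/x1/x2 naming the post uses for the notebook.

```python
import random

def make_gravity_dataset(n, seed=42):
    """Sample (m1, m2, r, F) rows in the ranges listed above.

    F is computed as m1 * m2 / r**2, i.e. Newton's law with G set to 1.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        m1 = rng.uniform(1, 100)    # kg
        m2 = rng.uniform(1, 100)    # kg
        r = rng.uniform(0.05, 10)   # m
        rows.append((m1, m2, r, m1 * m2 / r**2))
    return rows

data = make_gravity_dataset(1000)
X = [row[:3] for row in data]  # inputs only: x0 = m1, x1 = m2, x2 = r
y = [row[3] for row in data]   # target: F
```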

Here, symbolic regression works through genetic programming. The AI starts with completely random equations, like:

F = 4640.513

It tests how well each equation predicts the force values. Equations that perform better are kept, and bad ones are discarded.

It mutates and evolves new equations by:

- Changing constants

- Swapping operators (+, -, *, /, ^)

- Combining the best parts of two different equations (crossover)

It repeats this process for hundreds of generations.
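To make the loop concrete, here is a toy genetic-programming symbolic regressor in plain Python. It is an illustrative sketch, not the code from the Colab, and several choices in it are assumptions:

- Expressions are nested tuples.
- Fitness is mean relative error.
- `^` is left out, so squares must be built as `r * r`.
- Population size, the tree-size cap and the mutation rates are arbitrary.

Mutation covers the three moves listed above (changing constants, swapping operators, replacing subtrees), and crossover grafts a subtree from one parent into another. A run this small will usually not reach Newton's law; it only shows the mechanics.

```python
import math
import random

# Operator set; "^" is left out, so squares have to be built as r * r.
OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b if abs(b) > 1e-9 else 1.0,  # "protected" division
}
N_VARS = 3     # x0 = m1, x1 = m2, x2 = r
MAX_SIZE = 40  # cap on tree size to keep expressions from bloating

def random_tree(rng, depth=3):
    """Grow a random expression: a variable, a constant, or an operator node."""
    if depth == 0 or rng.random() < 0.3:
        if rng.random() < 0.7:
            return ("x", rng.randrange(N_VARS))
        return ("c", round(rng.uniform(-5, 5), 3))
    return (rng.choice(list(OPS)), random_tree(rng, depth - 1), random_tree(rng, depth - 1))

def evaluate(tree, x):
    if tree[0] == "x":
        return x[tree[1]]
    if tree[0] == "c":
        return tree[1]
    return OPS[tree[0]](evaluate(tree[1], x), evaluate(tree[2], x))

def error(tree, data):
    """Fitness: mean relative error over (inputs, F) pairs. Lower is better."""
    total = sum(abs(evaluate(tree, x) - f) / f for x, f in data)
    return total / len(data) if math.isfinite(total) else math.inf

def paths(tree, path=()):
    """Yield the position of every node as a tuple of child indices."""
    yield path
    if tree[0] in OPS:
        yield from paths(tree[1], path + (1,))
        yield from paths(tree[2], path + (2,))

def get(tree, path):
    for i in path:
        tree = tree[i]
    return tree

def put(tree, path, new):
    """Return a copy of tree with the node at path replaced by new."""
    if not path:
        return new
    node = list(tree)
    node[path[0]] = put(tree[path[0]], path[1:], new)
    return tuple(node)

def mutate(rng, tree):
    target = rng.choice(list(paths(tree)))
    node = get(tree, target)
    if node[0] == "c":                         # change a constant
        return put(tree, target, ("c", node[1] + rng.gauss(0, 1)))
    if node[0] in OPS and rng.random() < 0.5:  # swap an operator
        return put(tree, target, (rng.choice(list(OPS)), node[1], node[2]))
    return put(tree, target, random_tree(rng, depth=2))  # replace a subtree

def crossover(rng, a, b):
    """Graft a random subtree of b onto a random position in a."""
    donor = get(b, rng.choice(list(paths(b))))
    return put(a, rng.choice(list(paths(a))), donor)

def evolve(data, generations=50, pop_size=200, seed=0):
    rng = random.Random(seed)
    population = [random_tree(rng) for _ in range(pop_size)]
    history = []  # best error per generation
    for _ in range(generations):
        scored = sorted(((error(t, data), t) for t in population), key=lambda p: p[0])
        history.append(scored[0][0])
        next_gen = [t for _, t in scored[: pop_size // 10]]  # elitism: keep the best 10%
        while len(next_gen) < pop_size:
            # Tournament selection: the fittest of 3 random individuals becomes a parent.
            parent = min(rng.sample(scored, 3), key=lambda p: p[0])[1]
            if rng.random() < 0.5:
                other = min(rng.sample(scored, 3), key=lambda p: p[0])[1]
                child = crossover(rng, parent, other)
            else:
                child = mutate(rng, parent)
            next_gen.append(child if sum(1 for _ in paths(child)) <= MAX_SIZE else parent)
        population = next_gen
    return scored[0][1], history

# Tiny demo on Newton-style data (G = 1): shows the mechanics, not a guaranteed rediscovery.
rng = random.Random(1)
data = []
for _ in range(30):
    m1, m2, r = rng.uniform(1, 100), rng.uniform(1, 100), rng.uniform(0.05, 10)
    data.append(((m1, m2, r), m1 * m2 / r**2))
best, history = evolve(data, generations=20, pop_size=100)
print("best expression:", best)
print("best error, first vs last generation:", history[0], history[-1])
```

Elitism carries the best equation of each generation into the next. As a result, the best error can only stay the same or drop from one generation to the next.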

At some point, it found that force falls off with distance, though not yet as an inverse square:

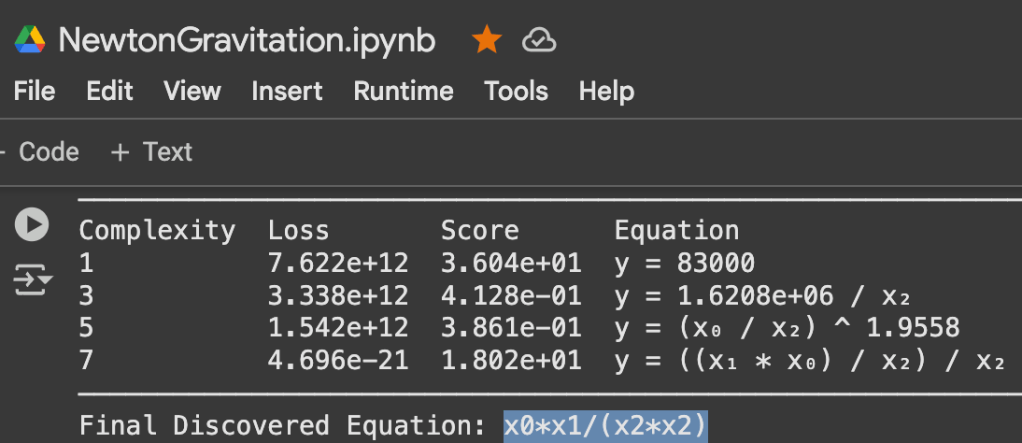

F = r^-4.68

Then it realized that mass plays a role, and finally it arrived at Newton’s Law:

F = (m1 / r^2) * m2

The AI independently rediscovered the fundamental equation of gravity!

You can check out the Google Colab of the experiment here – NewtonGravitation. I have saved the run where it arrives at Newton’s Law of Gravitation so you can see for yourself.

In the notebook, scroll up to the code block to see that x0, x1, and x2 correspond to m1, m2, and r.

What does this all mean?

Instead of making some grandiose statements, let me pose a set of questions.

We fed raw numerical data into AI and let it rediscover a fundamental law of physics: no predefined equations, just relationships emerging from the data.

But what happens when AI is applied to real experimental data, where the governing law isn’t known?

Would AI be able to detect patterns that humans missed?

What if the discovered equation didn’t match observations?

- Could it mean new physics—hidden variables or undiscovered forces?

- Could it be like when Neptune was discovered, after Uranus’s orbit didn’t match Newtonian predictions?

- Could AI flag anomalies the way we realized galaxies rotate “too fast,” leading to dark matter theories?

Imagine if Einstein hadn’t come along…

- Would we have blindly applied Newton’s Laws to black holes and gravitational lensing, missing deeper insights?

- Would AI have flagged the inconsistencies in Mercury’s orbit, hinting at relativity before Einstein?

- Could AI have recognized that Newton’s Law breaks down at high velocities and strong gravitational fields—pointing us toward relativity?

That’s the real power of AI in scientific discovery.

Not just finding equations, but helping us realize when those equations stop working.
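One simple way to notice when an equation stops working is to compare a fitted law against observations and flag the points whose relative residual exceeds a tolerance. The sketch below does this on synthetic data. The "observations" follow Newton's law except for a made-up correction at small r, which is purely hypothetical and stands in for unmodeled physics. The 5% tolerance is arbitrary.

```python
def newton(m1, m2, r):
    """The law found by the search (G = 1, as in the training data)."""
    return m1 * m2 / r**2

def flag_anomalies(observations, law, tolerance=0.05):
    """Return (m1, m2, r, residual) for observations the law misses by more than tolerance."""
    flagged = []
    for m1, m2, r, observed in observations:
        residual = abs(observed - law(m1, m2, r)) / abs(observed)
        if residual > tolerance:
            flagged.append((m1, m2, r, residual))
    return flagged

def observed_force(m1, m2, r):
    """Hypothetical 'true' force: Newton's law plus a correction that only matters at small r."""
    return newton(m1, m2, r) * (1 + 0.01 / r**2)

observations = [(10.0, 20.0, r, observed_force(10.0, 20.0, r)) for r in (0.1, 0.3, 1.0, 3.0, 10.0)]
# Only the two smallest separations (r = 0.1 and r = 0.3) exceed the 5% tolerance.
for m1, m2, r, residual in flag_anomalies(observations, newton):
    print(f"r = {r}: law is off by {residual:.0%}")
```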

From Randomness to Reasoning

The genetic algorithm behind symbolic regression is powerful – but blind. It mutates equations randomly, hoping to stumble onto the right answer. But what if we could steer it? That’s where reasoning comes in. By adding LLM-driven analysis, we can guide the search intelligently, cutting through randomness and converging on the right equation faster.

🔹 Meet ReasoningSymbolicRegressor

The AI that thinks and searches for equations.

Traditional symbolic regression finds patterns in data, but it lacks reasoning. ReasoningSymbolicRegressor changes that by combining PySR with LLM-driven analysis, allowing it to:

✅ LLM-Guided Exploration: Dynamically adjusts search parameters using AI reasoning.

✅ Self-Repairing Feedback: Detects errors in PySR configurations and prompts the LLM to correct them.

✅ Iterative Refinement: Improves equations over multiple guided cycles.

✅ Early Stopping: Terminates when the LLM determines the correct equation has been found.
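The guided loop described above can be sketched roughly as follows. This is not the package's actual API. `run_search` and `ask_advisor` are hypothetical stand-ins: a real version would call PySR and an LLM. Here they are stubbed with simple deterministic logic so the control flow runs on its own. The `niterations` key is loosely modeled on PySR's setting of that name.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Round:
    params: dict
    equation: Optional[str] = None
    loss: Optional[float] = None
    error: Optional[str] = None

def run_search(params):
    """Hypothetical stand-in for one symbolic-regression run (a real version might call PySR)."""
    if params.get("niterations", 0) <= 0:
        raise ValueError("niterations must be a positive integer")
    if params["niterations"] >= 40:     # pretend longer searches find the exact law
        return "x0 * x1 / x2**2", 0.0
    return "x0 * x2**-4.68", 1.3        # ...and shorter ones get stuck on a partial fit

def ask_advisor(history):
    """Hypothetical stand-in for the LLM: read past rounds, return (stop, next_params)."""
    last = history[-1]
    if last.error is not None:          # self-repair: fix the broken configuration
        return False, {**last.params, "niterations": 10}
    if last.loss < 1e-6:                # early stopping: the equation fits exactly
        return True, last.params
    # Iterative refinement: give the next search more time.
    return False, {**last.params, "niterations": last.params["niterations"] * 2}

def guided_search(params, max_rounds=10):
    history = []
    for _ in range(max_rounds):
        try:
            equation, loss = run_search(params)
            history.append(Round(params, equation, loss))
        except ValueError as exc:       # feed the failure back instead of crashing
            history.append(Round(params, error=str(exc)))
        stop, params = ask_advisor(history)
        if stop:
            break
    return history

history = guided_search({"niterations": 0})
for i, rnd in enumerate(history, 1):
    print(i, rnd.params, rnd.equation or rnd.error, rnd.loss)
```

Starting from a deliberately broken configuration, the stubbed run takes four rounds:

1. The invalid setting is repaired.
2. and 3. Two refinement rounds double the search budget.
4. The final round finds the exact law and stops early.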

👉 Try it now with:

```shell
pip install reasoning-symbolic-regressor
```

Check out the GitHub repo of the project. Hope it lights a spark of some sort and gets you thinking about the possibilities.

https://github.com/SidU/ReasoningSymbolicRegressor
